Today you get my home-cooked ramblings on game theory, and I'll be straying a bit from game theory as such to an application that interests me. If you really want to learn more about mixed strategies, try the links.

Mixed strategies in game theory fascinate me. The basic premise of a mixed strategy is that sometimes there is no pure-strategy Nash equilibrium. In other words, there is no single pair of choices, one per player, where each player's choice is the best they can do given the other's, without cooperation or coordination with the other player. In these cases there is still an equilibrium, but it exists as a random mix between two (maybe more) choices for each player.
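To make this concrete, here is a minimal Python sketch that finds the fully mixed equilibrium of a 2x2 game using the usual indifference argument: each player mixes so that the *other* player gets the same expected payoff from either choice. This is my own illustration. The function name `mixed_equilibrium_2x2` is hypothetical, and it assumes the game actually has an interior mixed equilibrium, as matching pennies does.

```python
from fractions import Fraction

def mixed_equilibrium_2x2(row_payoffs, col_payoffs):
    """Fully mixed equilibrium of a 2x2 game via the indifference condition.

    row_payoffs[i][j] and col_payoffs[i][j] are the payoffs to the row and
    column player when the row player picks i and the column player picks j.
    Returns (p, q): p = P(row player picks row 0), q = P(column player picks column 0).
    Assumes integer payoffs and that an interior (fully mixed) equilibrium exists.
    """
    (a, b), (c, d) = row_payoffs
    (e, f), (g, h) = col_payoffs
    # q makes the row player indifferent: q*a + (1-q)*b == q*c + (1-q)*d
    q = Fraction(d - b, (a - b) - (c - d))
    # p makes the column player indifferent: p*e + (1-p)*g == p*f + (1-p)*h
    p = Fraction(h - g, (e - g) - (f - h))
    if not (0 <= p <= 1 and 0 <= q <= 1):
        raise ValueError("no fully mixed equilibrium for these payoffs")
    return p, q

# Matching pennies: the row player wins 1 if the coins match and loses 1 otherwise.
row = ((1, -1), (-1, 1))
col = ((-1, 1), (1, -1))
print(mixed_equilibrium_2x2(row, col))  # (Fraction(1, 2), Fraction(1, 2))
```

In matching pennies, neither pure choice is safe, because any fixed choice can be exploited. If both players flip a fair coin, though, neither can gain by changing their mix on their own.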

Bluffing in poker

Bluffing in poker is a good example of a mixed strategy. Bluff too often, and other players will be more likely to call your bluff. Don't bluff enough, and other players will recognize when you are playing a strong hand (it's more complicated than that, because poker involves a lot of signaling too). Play poker with the right mix of bluffing and playing straight, choose between them at random without tipping off other players, and you will be playing at a Nash equilibrium.
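The same indifference idea gives a toy answer to "how often should I bluff?" The sketch below rests on a heavily simplified final-bet model, which is my assumption and not real poker. The bettor holds either the best hand or a hopeless one and bets `bet` chips into a pot of `pot` chips. The opponent's hand beats only a bluff. Calling risks `bet` to win `pot + bet`, so the opponent is indifferent between calling and folding when the share of bets that are bluffs equals bet / (pot + 2 * bet). The function names are hypothetical.

```python
from fractions import Fraction

def indifferent_bluff_share(pot, bet):
    """Share of bets that are bluffs at which the opponent is indifferent
    between calling and folding (toy model, integer chip counts)."""
    # Indifference: share * (pot + bet) == (1 - share) * bet
    return Fraction(bet, pot + 2 * bet)

def caller_ev(pot, bet, bluff_share):
    """Opponent's expected chips from calling, relative to folding (EV 0)."""
    # Win pot + bet against a bluff, lose bet against a real hand.
    return bluff_share * (pot + bet) - (1 - bluff_share) * bet

share = indifferent_bluff_share(100, 100)  # a pot-sized bet
print(share)                               # 1/3
print(caller_ev(100, 100, share))          # 0: calling and folding are equal
```

If the bettor bluffs more often than this share, `caller_ev` turns positive and calling becomes profitable ("bluff too often"). If the bettor bluffs less often, it turns negative and folding is correct, so the opponent can simply fold whenever the bettor bets ("don't bluff enough").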

I have a proposition, an agenda for this blog that I am working towards, and I have most of the pieces in place to start discussing it seriously:

I think mixed strategies can help describe how people actually play games, and how well they play them.

Typical game theory examples are very simple: there are generally two or three strategies, and one of them might be the best (optimal). The games we like to play are not so simple. A player of a board game (or card game, or sport) might have multiple strategies and multiple moves for each piece on the board, so the total number of choices might be very large. Finding the best move might not be a trivial problem (a topic for another day).

Here is the obvious part: more skillful players will be better at picking out good moves or strategies. A perfect player would always make the best available choice, but less skillful players will tend to make less-than-optimal choices, possibly because they have imperfect knowledge of how the game works.
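One way to model this range of skill is the logit (softmax) choice rule. I borrowed it as an illustration, and I'm not claiming it describes how people actually play. Under this rule, a player picks each move with probability proportional to exp(value / temperature). The move values and the `temperature` parameter are hypothetical. A low temperature gives near-perfect play, and a very high temperature gives uniformly random play.

```python
import math

def choice_probabilities(move_values, temperature):
    """Logit (softmax) choice rule: better moves are chosen more often.

    Low temperature approaches always picking the best move; very high
    temperature approaches picking uniformly at random.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    best = max(move_values)
    # Subtract the best value before exponentiating to avoid overflow.
    weights = [math.exp((v - best) / temperature) for v in move_values]
    total = sum(weights)
    return [w / total for w in weights]

def expected_value(move_values, temperature):
    """Average value of the move this hypothetical player makes."""
    probs = choice_probabilities(move_values, temperature)
    return sum(p * v for p, v in zip(probs, move_values))

moves = [1.0, 0.5, 0.0]  # hypothetical values of three available moves
for t in (0.01, 0.5, 1e6):
    print(t, round(expected_value(moves, t), 3))
```

As the temperature rises, the expected value of play falls from about 1.0 (always the best move) toward 0.5, which is simply the average of the available moves, or what a player choosing blindly would get.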

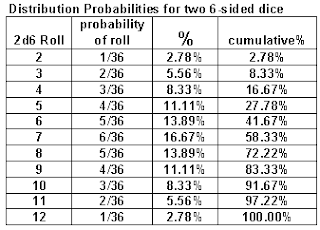

Information clearly plays an important role, so let's consider an unskilled player: someone new to a game who knows nothing at all and essentially chooses strategies at random. This might also be a computer program that literally plays randomly (some early versions of computer games such as chess played essentially random games like this).

Now the less obvious part: I'm departing from the concept of an optimal mixed strategy to random play that is very far from optimal. In my own notes I've been referring to this as "random strategy", though perhaps "blind strategy" is more apt, since the player uses no information about the game. This sort of random strategy might be a yardstick to gauge the ability of non-random players: a baseline for comparison. Note that it is possible to play worse than random, but doing so requires information about the game and consistently bad choices, and most human players can probably do better than random even on their first try at a new game.

[Image by Holger Meinhardt]

Information leads to opportunity for good choices, and good choices lead to good play. Player skill might be interpreted as the ability to take advantage of the available information and choose better strategies. So here is the conclusion of this ramble: information is a statistically measurable quantity, so it should be possible to consider how much information is available to a player and how well they are able to make use of it (how much better than random).

What I'm working towards here is (I think) a statistical measure called a likelihood ratio. A trivial example might be a game with two choices, one winning and the other losing, where the player must use some information to guess the winning choice. The random (blind) player will win 50% of the time, but the informed (yet imperfect) player should win more often than that, let's say 75% of the time. The likelihood ratio would then be 0.75/0.50 = 1.5.
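The arithmetic of this example is easy to check, and a quick simulation shows the same ratio emerging from observed win rates. This is a minimal sketch. The player model and the function names are hypothetical: the informed player simply picks the winning choice with a fixed probability of 0.75. The "likelihood ratio" here is just the ratio of win rates, as in the example above.

```python
import random

def win_rate_ratio(p_informed, p_blind):
    """Ratio of an informed player's win rate to the blind (random) baseline."""
    return p_informed / p_blind

def simulate_win_rate(p_correct, n_games, rng):
    """Fraction of two-choice games won by a player who picks the
    winning choice with probability p_correct."""
    wins = sum(rng.random() < p_correct for _ in range(n_games))
    return wins / n_games

rng = random.Random(42)  # fixed seed so the run is repeatable
n_games = 100_000
blind = simulate_win_rate(0.5, n_games, rng)      # blind player: a coin flip
informed = simulate_win_rate(0.75, n_games, rng)  # hypothetical informed player

print(win_rate_ratio(0.75, 0.5))        # exact ratio from the example: 1.5
print(win_rate_ratio(informed, blind))  # simulated estimate, close to 1.5
```

With many games, the simulated ratio settles near 1.5. With only a handful of games, it can wander well away from that value, which is one reason a proper statistical treatment is needed before reading skill off a few results.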